
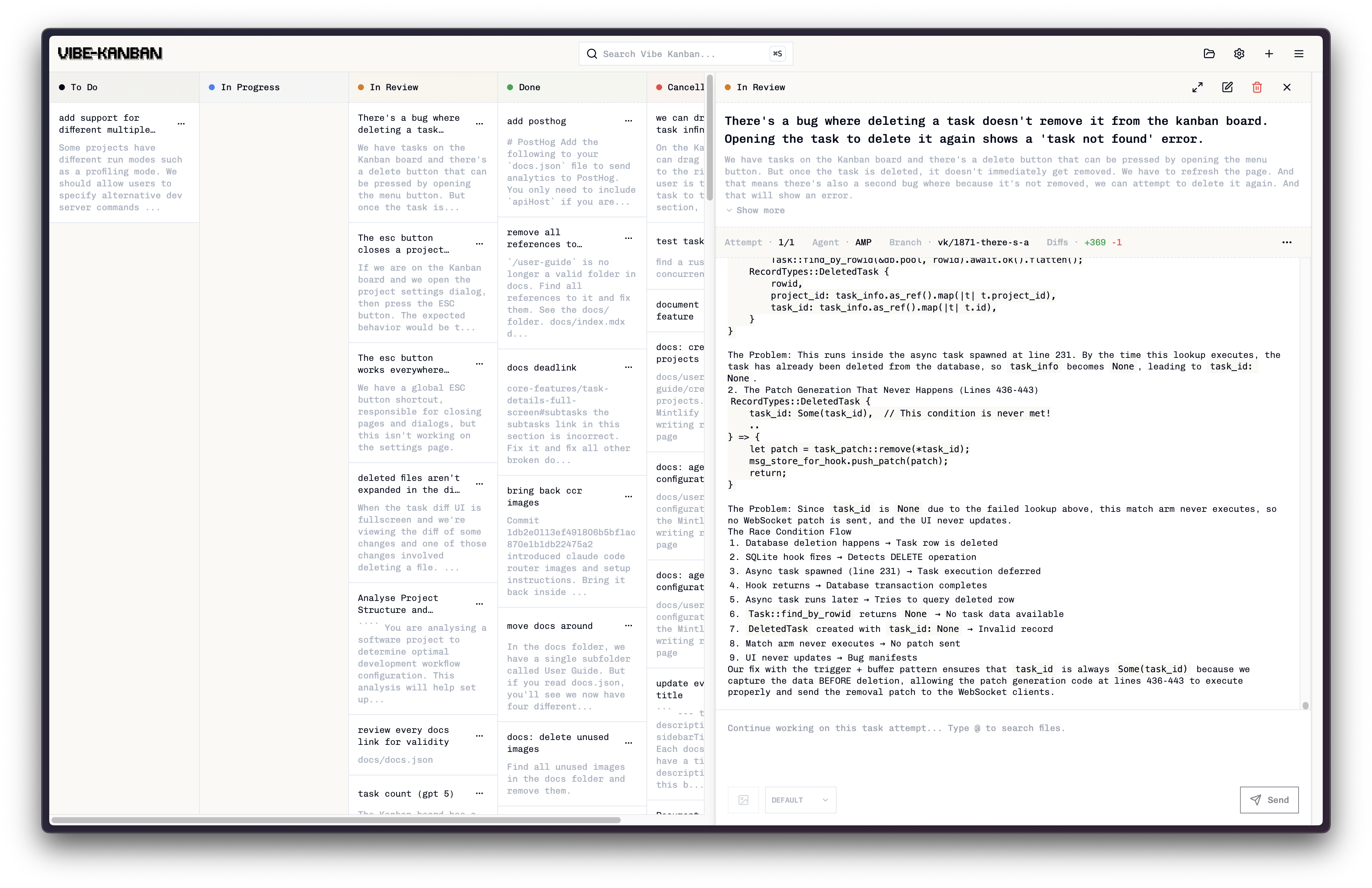

Get 10X more out of Claude Code, Gemini CLI, Codex, Amp and other coding agents...

Overview

AI coding agents are increasingly writing the world's code, and human engineers now spend the majority of their time planning, reviewing, and orchestrating tasks. Vibe Kanban streamlines this process, enabling you to:

- Easily switch between different coding agents

- Orchestrate the execution of multiple coding agents in parallel or in sequence

- Quickly review work and start dev servers

- Track the status of tasks that your coding agents are working on

- Centralise the MCP configuration of your coding agents

- Open projects remotely via SSH when running Vibe Kanban on a remote server

You can watch a video overview here.

Installation

Make sure you have authenticated with your favourite coding agent. A full list of supported coding agents can be found in the docs. Then in your terminal run:

npx vibe-kanban

Documentation

Please head to the website for the latest documentation and user guides.

Support

We use GitHub Discussions for feature requests: please open a discussion to request a feature. For bugs, please open an issue on this repo.

Contributing

We would prefer that ideas and changes are first raised with the core team via GitHub Discussions or Discord, where we can discuss implementation details and alignment with the existing roadmap. Please do not open PRs without first discussing your proposal with the team.

Development

Prerequisites

Additional development tools:

cargo install cargo-watch

cargo install sqlx-cli

Install dependencies:

pnpm i

Running the dev server

pnpm run dev

This will start the backend. A blank DB will be copied from the dev_assets_seed folder.
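The seed-copy step can be pictured with a small Rust sketch. This is not Vibe Kanban's actual dev script. Only the `dev_assets_seed` folder name comes from this README. The `ensure_dev_db` function, the destination folder, and the database file name are hypothetical. The sketch also assumes the blank database is copied only when no dev database exists yet.

```rust
use std::fs;
use std::io;
use std::path::Path;

/// Hypothetical sketch: copy a blank seed database into a dev data folder
/// only if no database with that name exists there yet.
/// Returns Ok(true) if a copy was made, Ok(false) if an existing DB was kept.
pub fn ensure_dev_db(seed_dir: &Path, dev_dir: &Path, db_name: &str) -> io::Result<bool> {
    let target = dev_dir.join(db_name);
    if target.exists() {
        // An existing dev database is left untouched (assumed behavior).
        return Ok(false);
    }
    // Create the destination folder if needed, then copy the blank seed DB.
    fs::create_dir_all(dev_dir)?;
    fs::copy(seed_dir.join(db_name), &target)?;
    Ok(true)
}
```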

Building the frontend

To build just the frontend:

cd frontend

pnpm build

Build from source

- Run build-npm-package.sh

- In the npx-cli folder, run npm pack

- You can run your build with npx [GENERATED FILE].tgz

Environment Variables

The following environment variables can be configured at build time or runtime:

| Variable | Type | Default | Description |
|---|---|---|---|
| POSTHOG_API_KEY | Build-time | Empty | PostHog analytics API key (disables analytics if empty) |
| POSTHOG_API_ENDPOINT | Build-time | Empty | PostHog analytics endpoint (disables analytics if empty) |
| BACKEND_PORT | Runtime | 0 (auto-assign) | Backend server port |
| FRONTEND_PORT | Runtime | 3000 | Frontend development server port |
| HOST | Runtime | 127.0.0.1 | Backend server host |
| DISABLE_WORKTREE_ORPHAN_CLEANUP | Runtime | Not set | Disable git worktree cleanup (for debugging) |

Build-time variables must be set when running pnpm run build. Runtime variables are read when the application starts.
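The table's defaults and its build-time/runtime split could map onto code as in the minimal Rust sketch below. The `RuntimeConfig` type and the helper functions are hypothetical and are not Vibe Kanban's actual configuration code.

- Build-time keys are read with the standard `option_env!` macro, which captures a value when the binary is compiled.
- Runtime keys are read with `std::env::var` at startup.
- Treating any value of DISABLE_WORKTREE_ORPHAN_CLEANUP as "disable" is an assumption.

```rust
use std::env;

/// Hypothetical runtime settings mirroring the table; not the project's real type.
#[derive(Debug, PartialEq)]
pub struct RuntimeConfig {
    pub backend_port: u16, // 0 lets the OS assign a free port when binding
    pub frontend_port: u16,
    pub host: String,
    pub orphan_cleanup_enabled: bool,
}

impl RuntimeConfig {
    /// Build the config from any key lookup, so parsing can be tested
    /// without touching the real process environment.
    pub fn from_lookup<F>(lookup: F) -> Self
    where
        F: Fn(&str) -> Option<String>,
    {
        // Parse a port, falling back to the default if unset or invalid.
        let port = |key: &str, default: u16| -> u16 {
            lookup(key)
                .and_then(|v| v.trim().parse::<u16>().ok())
                .unwrap_or(default)
        };
        RuntimeConfig {
            backend_port: port("BACKEND_PORT", 0),
            frontend_port: port("FRONTEND_PORT", 3000),
            host: lookup("HOST").unwrap_or_else(|| "127.0.0.1".to_string()),
            // Assumption: cleanup runs unless the variable is set to anything.
            orphan_cleanup_enabled: lookup("DISABLE_WORKTREE_ORPHAN_CLEANUP").is_none(),
        }
    }

    /// Read runtime settings from the process environment at startup.
    pub fn from_env() -> Self {
        Self::from_lookup(|key| env::var(key).ok())
    }
}

/// Analytics stay off unless both build-time values are present and non-empty.
pub fn analytics_enabled(api_key: Option<&str>, endpoint: Option<&str>) -> bool {
    matches!((api_key, endpoint), (Some(k), Some(e)) if !k.is_empty() && !e.is_empty())
}

/// Values captured by the compiler when the binary was built.
pub fn compiled_analytics_enabled() -> bool {
    analytics_enabled(option_env!("POSTHOG_API_KEY"), option_env!("POSTHOG_API_ENDPOINT"))
}
```

Taking a lookup closure instead of reading `std::env` directly keeps the parsing testable without mutating the process environment.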

Remote Deployment

When running Vibe Kanban on a remote server (e.g., via systemctl, Docker, or cloud hosting), you can configure your editor to open projects via SSH:

- Access via tunnel: Use Cloudflare Tunnel, ngrok, or similar to expose the web UI

- Configure remote SSH in Settings → Editor Integration:
  - Set Remote SSH Host to your server hostname or IP
  - Set Remote SSH User to your SSH username (optional)

- Prerequisites:
  - SSH access from your local machine to the remote server
  - SSH keys configured (passwordless authentication)
  - VSCode Remote-SSH extension

When configured, the "Open in VSCode" buttons will generate URLs like vscode://vscode-remote/ssh-remote+user@host/path that open your local editor and connect to the remote server.
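The URL shape above can be sketched as a small Rust helper. The function name is hypothetical. It assembles the vscode://vscode-remote/ssh-remote+user@host/path form and omits "user@" when no Remote SSH User is set, since that setting is optional. It does not percent-encode paths, so paths containing spaces or other special characters would need extra handling.

```rust
/// Hypothetical helper: build an "Open in VSCode" link for a project on a
/// remote host, following the vscode-remote URL shape.
pub fn vscode_remote_url(host: &str, user: Option<&str>, path: &str) -> String {
    // Prefix the host with "user@" only when a non-empty user is configured.
    let authority = match user {
        Some(u) if !u.is_empty() => format!("{u}@{host}"),
        _ => host.to_string(),
    };
    // Ensure exactly one slash separates the authority from the path.
    let path = path.trim_start_matches('/');
    format!("vscode://vscode-remote/ssh-remote+{authority}/{path}")
}
```

For example, `vscode_remote_url("dev.example.com", Some("alice"), "/srv/repo")` yields `vscode://vscode-remote/ssh-remote+alice@dev.example.com/srv/repo`.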

See the documentation for detailed setup instructions.